-

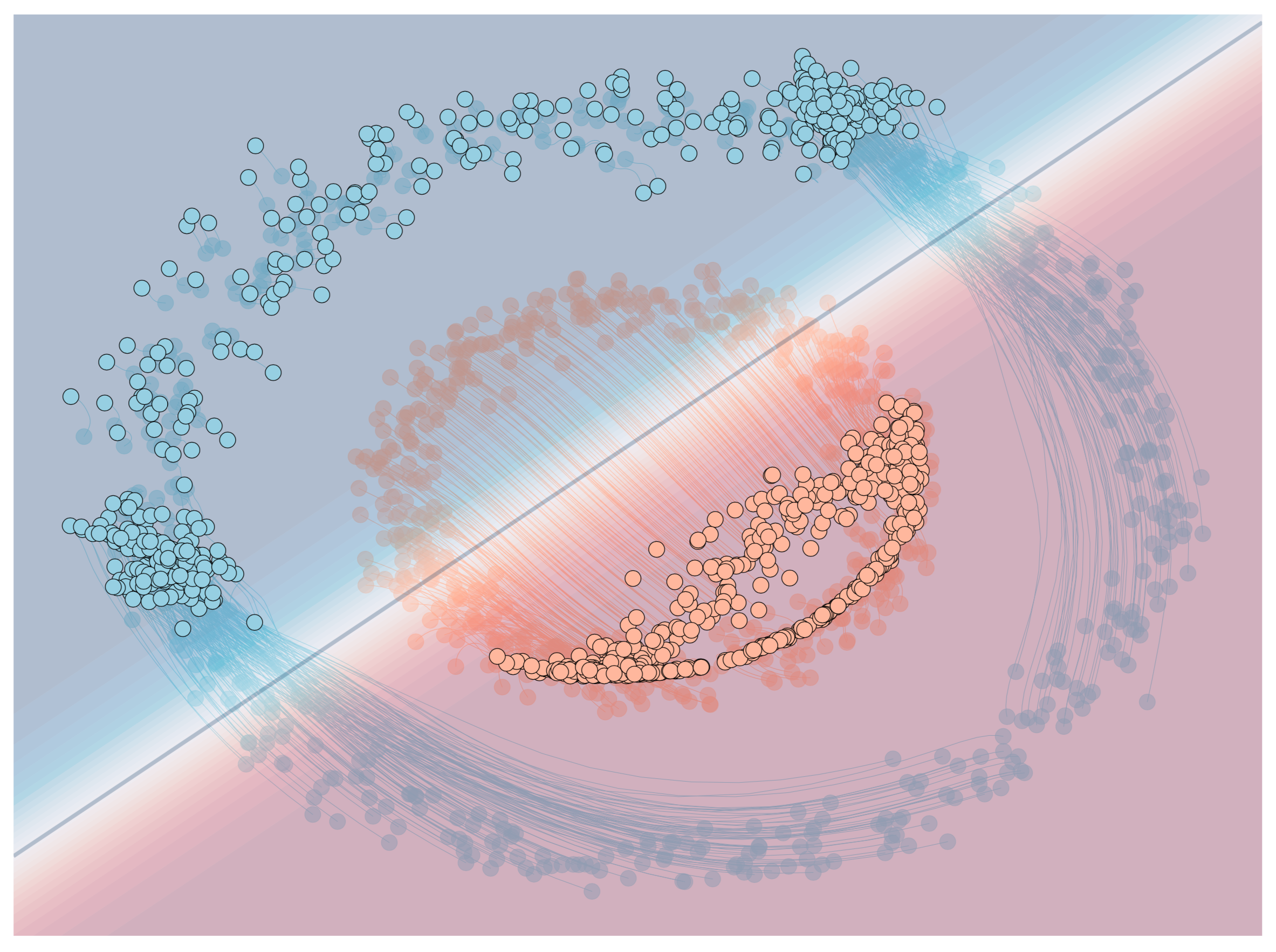

Animated Local Explanations

Explainability is the part of machine learning where we try to demystify the factors that influence a particular model prediction, particularly when the predictive functions are complex and nonlinear. In a typical paper or blog post, you'll see explanations visualised in a nice static image. After recently reading a paper, I was inspired to produce animations of explanations, as well as a few additional insights.

-

Eager exception logging in Python

Recently I've been spending more time introducing best practice with Python, and in particular I have started logging. I've actually become quite positive about logging and have (several times) found it vital to debugging the errors in my code. On several occasions, though, I found that a particular pattern was missing at the intersection of logging and exception handling, which I've come to think of as 'eager exception logging'. The key difference between this and other exception patterns is that I want to raise and log the exception at the same time.
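
As a rough illustration of raising and logging in one step, here is a minimal sketch. The helper name `raise_logged` and its parameters are hypothetical, chosen for this example only, and may differ from the pattern the post arrives at.

```python
import logging

logger = logging.getLogger(__name__)


def raise_logged(exc, log=logger, level=logging.ERROR):
    """Log the exception's type and message, then raise it.

    Hypothetical helper: the message is logged at the point where the
    exception is created, not where it is eventually caught.
    """
    log.log(level, "%s: %s", type(exc).__name__, exc)
    raise exc


def parse_age(text):
    """Example caller: convert text to a non-negative integer age."""
    if not text.isdigit():
        raise_logged(ValueError(f"age must be a non-negative integer, got {text!r}"))
    return int(text)
```

Calling `parse_age("abc")` writes one ERROR record to the module logger and then raises the same `ValueError` to the caller, so the log entry exists even if the exception is later swallowed.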

-

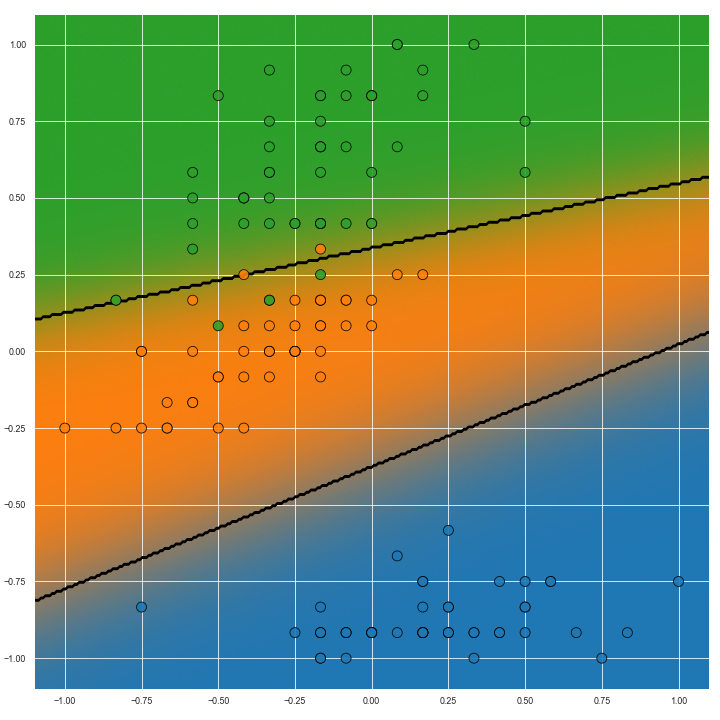

Visualising confidence in multi-class classification problems

It is best practice to visualise the confidence of predictive models whenever possible. With binary classification in two dimensions this is particularly straightforward, since confidence that is lost from one class is necessarily gained by the other. With more classes the visualisation becomes less straightforward: confidence can be lost to any combination of classes, and to each at different rates. Voronoi diagrams are a popular way of displaying multi-class predictions, but unfortunately these do not directly show predictive confidence. In this post I briefly describe and give code for a method that embeds multi-class confidence in predictive diagrams.
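
One simple way to make confidence visible, which is an assumption for illustration and not necessarily the method described in the post, is to colour each point by blending one colour per class in proportion to the predicted probabilities. The function `blend_colours` and the palette below are hypothetical names.

```python
def blend_colours(probabilities, class_colours):
    """Mix one RGB colour per class, weighted by the class probabilities.

    A confident prediction yields a nearly pure class colour; an uncertain
    one yields a muddier mixture of the competing classes.
    """
    if len(probabilities) != len(class_colours):
        raise ValueError("need exactly one colour per class")
    total = sum(probabilities)
    if total <= 0:
        raise ValueError("probabilities must sum to a positive value")
    return tuple(
        sum(p * colour[channel] for p, colour in zip(probabilities, class_colours)) / total
        for channel in range(3)
    )


# Hypothetical three-class palette: red, green, blue.
PALETTE = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]

confident = blend_colours([1.0, 0.0, 0.0], PALETTE)   # pure red
uncertain = blend_colours([0.5, 0.5, 0.0], PALETTE)   # even red/green mix
```

Evaluating this over a grid of inputs and drawing the resulting colours gives a diagram in which regions near decision boundaries fade between class colours instead of switching abruptly.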

-

Bringing focus back to your inbox

I realised recently that my primary email account is barely fulfilling its purpose anymore. The main cause: over-subscription has devalued the utility of the basic service. In other words, since the majority of the emails I receive have basically zero value, I give much less attention to those that are actually valuable. I decided to take action and brutally cull and unsubscribe from all but the most important or valuable services. The main problem with this decision is that I am fairly lazy, so I wanted to do as little manual labour as possible. Since I'm also rather forgetful and will surely need to do this again in the future, I'm really hoping that this post will help the future me!

-

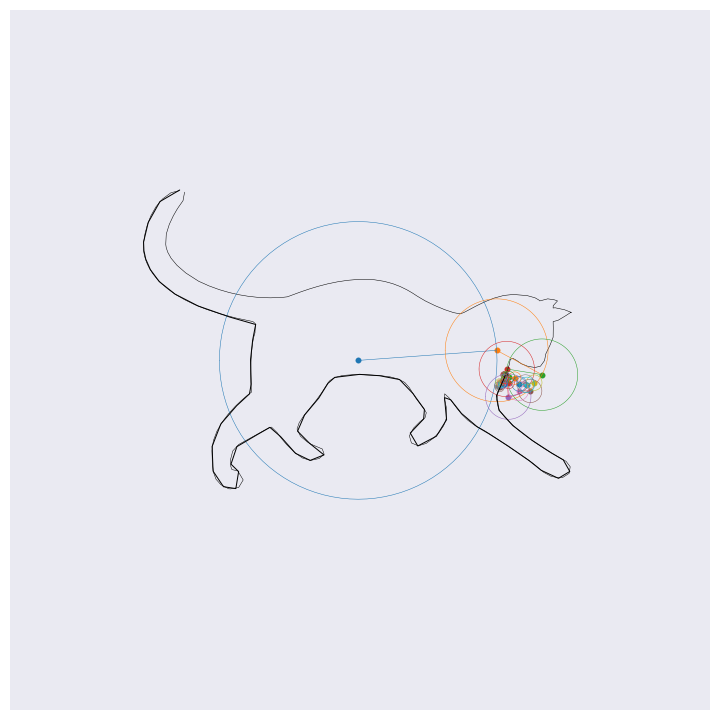

Taking the Fourier transform of your cat

There are a few YouTube channels that I watch fairly regularly that have recently been making some truly beautiful visualisations of the Fourier transform. The visualisations really are beautiful (I'll link to them in the text), not only aesthetically but also in how intuitively they show how the Fourier transform works and what it achieves. I was inspired to try to replicate the visualisation procedure, and the code inside this post is a Python implementation of the method. The approach itself isn't terribly complicated, but I find the outcome hypnotic!

-

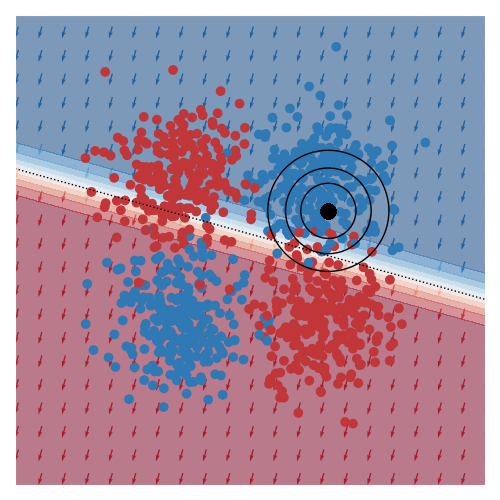

Neural ODEs with stochastic vector field mixtures

It was recently shown that neural ordinary differential equation models cannot solve fundamental and seemingly straightforward tasks, even with high-capacity vector field representations. This paper adds two further fundamental tasks to the set that baseline methods cannot solve, and proposes mixtures of stochastic vector fields as a model class that is capable of solving these essential problems. Dynamic vector field selection is of critical importance for our model, and our approach is to propagate component uncertainty over the integration interval with a technique based on forward filtering. We also formalise several loss functions that encourage desirable properties of the trajectory paths; of particular interest are those that directly encourage fewer expected function evaluations. Experimentally, we demonstrate that our model class is capable of capturing the natural dynamics of human behaviour, a notoriously volatile application area that baseline approaches cannot adequately model.